I would love students and staff to be able to bring their own devices and securely connect to our network without configuration and IT support, which has been impossible in the past. We have considered scenarios of users installing clients and configuring settings but all of these have potential problems. What happens if an end user breaks their personal system while trying to connect to our network? Who is responsible for the support?

Enter the idea of a bootable Linux USB stick with the Citrix client preloaded: no user settings are ever changed and all configuration is taken care of. I have chosen Ubuntu 10.04 LTS as my OS because it is flexible, easy to configure and known to boot well from USB.

For this to work in our environment, it needs to boot up, connect to the network and launch a Citrix login prompt automatically. As I don't want to open any "public" networks at this stage, I am using a secondary wireless network I already have in production. This network requires WPA2-Enterprise authentication, which adds some extra complexity to my set-up: I will need to load certificates into my image and configure WPA_Supplicant to automatically connect to my network using those certificates.

I am also going to load the latest Adobe Flash package and of course the Citrix client package.

Finally I am going to merge the casper-rw persistent changes back into the live boot USB's squash file system so the USBs can be reused over and over again without being re-imaged.

Prerequisites

- The Ubuntu 10.04 iso

- Any required CA, private keys and user certificates

- An internet connection

- An empty USB stick (primary)

- A second USB stick for storing the created file (secondary)

Let's get into it!

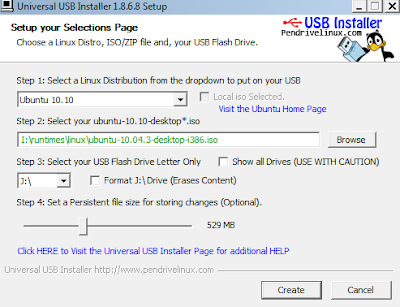

1. Copy Ubuntu onto the primary USB stick. I won't include a tutorial here, but the Ubuntu site has great tutorials; I used their Windows-based Universal USB Installer tutorial. Ensure you create the USB stick with persistent storage of at least 512MB. This "persistent storage" allows us to make changes that are kept after a reboot.

2. Boot Ubuntu off the primary USB stick.

3. Start by setting the wallpaper you want. I have set a wallpaper that says "Please be patient as a connection is established..."

4. If you are connecting to an open wireless network you can simply configure it in Network Manager and let it take care of the rest, but WPA2-Enterprise networks are slightly different. If you are using WPA2-Enterprise authentication then continue, otherwise you can skip to step 5.

Depending on your luck, the current humidity and if your shirt is purple or green, Network Manager may work with a WPA2-Enterprise network and your certificates or it may not. For this reason I use the more robust WPA_Supplicant and get my hands dirty on the command line.

First we need to remove Network Manager

sudo apt-get remove network-manager

Now let's configure WPA_Supplicant. First create a certificates folder under /etc/wpa_supplicant and give it the appropriate permissions.

sudo mkdir /etc/wpa_supplicant/certs

sudo chmod 700 /etc/wpa_supplicant/certs

Then copy your CA certificate, client certificate and client key in PEM format to the /etc/wpa_supplicant/certs directory. If your certificates have come from Active Directory in PFX format you will need to convert them to PEM. This can be a difficult step in the process, but the wpa_supplicant.conf man page has some great tips on this one. You can use the commands below to convert PFX certificates to PEM, but anything more is outside the scope of this tutorial.

Converting your client certificate and private key

openssl pkcs12 -in example.pfx -out user.pem -clcerts

Converting PFX CA certificate

openssl pkcs12 -in example.pfx -out ca.pem -cacerts -nokeys
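The configuration file later in this tutorial points at separate client.pem and client-key.pem files, whereas the first command above writes the certificate and the key together into a single user.pem. One way to line the names up is to export the two parts separately. The following is only a sketch under a few assumptions: the helper name split_pfx and the PFX_PASS and KEY_PASS environment variables are names I made up for illustration, and the openssl command-line tool must be installed.

```shell
#!/bin/bash
# Sketch: split a PFX into the client.pem and client-key.pem files that the
# wpa_supplicant configuration refers to. split_pfx, PFX_PASS and KEY_PASS are
# hypothetical names chosen for this example.
#   PFX_PASS = password protecting the PFX file
#   KEY_PASS = password used to protect the exported private key
# Both must be exported in the environment before calling the function.
split_pfx() {
    local pfx="$1" outdir="$2"

    # Client certificate only (no private key).
    openssl pkcs12 -in "$pfx" -clcerts -nokeys \
        -passin env:PFX_PASS -out "$outdir/client.pem" || return 1

    # Private key only, re-encrypted with KEY_PASS.
    openssl pkcs12 -in "$pfx" -nocerts \
        -passin env:PFX_PASS -passout env:KEY_PASS \
        -out "$outdir/client-key.pem" || return 1

    # The key should be readable by its owner only.
    chmod 600 "$outdir/client-key.pem"
}

# Example:
#   export PFX_PASS='pfx-password' KEY_PASS='test'
#   split_pfx example.pfx /etc/wpa_supplicant/certs
```

Whatever you choose for KEY_PASS is the value that belongs in private_key_passwd in the WPA_Supplicant configuration.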

Next create a config file under /etc/wpa_supplicant called config.conf and enter the following information

network={

ssid="networkname"

key_mgmt=WPA-EAP

scan_ssid=1

eap=TLS

pairwise=CCMP TKIP

group=CCMP TKIP

identity="username@domain"

ca_cert="/etc/wpa_supplicant/certs/ca.pem"

client_cert="/etc/wpa_supplicant/certs/client.pem"

private_key="/etc/wpa_supplicant/certs/client-key.pem"

private_key_passwd="test"

}

As you can see from the above configuration, you need to customize your SSID, the names of the certificate files, the identity and the private_key_passwd (if there is one).
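If you are preparing sticks for more than one network or identity, typing this file by hand each time invites mistakes. Here is a minimal sketch that writes the same network block from arguments. The function name write_wpa_config and its argument order are my own, not part of WPA_Supplicant, and the output path is an argument so you can try it in a scratch directory first.

```shell
#!/bin/bash
# Sketch: write the wpa_supplicant network block shown above from arguments.
# write_wpa_config is a hypothetical helper name, not part of wpa_supplicant.
# Usage: write_wpa_config OUTFILE SSID IDENTITY KEY_PASSWORD [CERT_DIR]
write_wpa_config() {
    local outfile="$1" ssid="$2" identity="$3" key_passwd="$4"
    local cert_dir="${5:-/etc/wpa_supplicant/certs}"

    # Write inside a subshell with a restrictive umask so a newly created
    # file is never world-readable, then force 600 in case it already existed.
    (
        umask 077
        cat > "$outfile" <<EOF
network={
    ssid="$ssid"
    key_mgmt=WPA-EAP
    scan_ssid=1
    eap=TLS
    pairwise=CCMP TKIP
    group=CCMP TKIP
    identity="$identity"
    ca_cert="$cert_dir/ca.pem"
    client_cert="$cert_dir/client.pem"
    private_key="$cert_dir/client-key.pem"
    private_key_passwd="$key_passwd"
}
EOF
    ) || return 1
    chmod 600 "$outfile"
}

# Example (run as root to write into /etc):
#   write_wpa_config /etc/wpa_supplicant/config.conf networkname username@domain test
```

The file holds the private key password in clear text, which is why the sketch restricts it to its owner.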

5. Install the Adobe Flash and Citrix packages, both are available from their respective websites and both are very easy to install on Ubuntu (you should open them from within Firefox straight to the package manager which handles the rest).

6. Now open Firefox and set your home page as your Citrix address. I have my Citrix Access Gateway available in this wireless network so I set the address of my Access Gateway as the home page.

7. Now we are going to put a very simple bash script called go into our home folder. Make it executable (chmod +x ~/go) so it can be launched at login. It reads as follows.

#!/bin/bash

sudo killall -9 wpa_supplicant

sudo /sbin/wpa_supplicant -c /etc/wpa_supplicant/config.conf -iwlan0 -B

sudo /sbin/wpa_supplicant -c /etc/wpa_supplicant/config.conf -iwlan1 -B

sudo /sbin/dhclient wlan0

sudo /sbin/dhclient wlan1

/usr/bin/firefox

Notice I have listed both wlan0 and wlan1. The target system might have totally different hardware (or multiple adapters), so by specifying two interfaces we are covering our bases (at least one should work).

This script will establish a wireless connection, grab a DHCP IP address and then start Firefox, which should open to your Citrix homepage.
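Hardcoding wlan0 and wlan1 covers the common cases, but an alternative is to ask the kernel which interfaces are wireless. The sketch below is my own variation, not what I run in production: list_wireless_ifaces is a made-up helper name, and it assumes the wireless driver exposes either a "wireless" directory or a "phy80211" link under /sys/class/net/<interface>, which is the usual behaviour but worth checking on your hardware.

```shell
#!/bin/bash
# Sketch: list wireless interfaces by looking in sysfs instead of assuming
# wlan0 and wlan1. list_wireless_ifaces is a hypothetical helper name.
# The optional argument (default /sys/class/net) only exists to make the
# function easy to try against a scratch directory.
list_wireless_ifaces() {
    local sysfs_net="${1:-/sys/class/net}" path
    for path in "$sysfs_net"/*; do
        # Wireless drivers normally expose a "wireless" directory or a
        # "phy80211" link under the interface; wired interfaces have neither.
        if [ -e "$path/wireless" ] || [ -e "$path/phy80211" ]; then
            basename "$path"
        fi
    done
}

# Possible use inside the go script:
#   for iface in $(list_wireless_ifaces); do
#       sudo /sbin/wpa_supplicant -c /etc/wpa_supplicant/config.conf -i"$iface" -B
#       sudo /sbin/dhclient "$iface"
#   done
```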

8. If you are connecting to an SSL site (which I hope you are since this is on a "public" network) then we need to copy the Firefox trusted certificates into the Citrix store. If we don't perform this operation, Citrix won't trust the SSL certificate on your site and will fail to launch a desktop.

sudo cp /usr/share/ca-certificates/mozilla/* /usr/lib/ICAClient/keystore/cacerts/

If you are using a self-generated certificate or a certificate issued by a private CA, then you also need to import that CA certificate into the Citrix store.
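For a private CA, importing comes down to copying its PEM certificate into the same keystore directory used in the command above. A small sketch with a sanity check follows; install_citrix_ca is a name I invented for this example, and the PEM check is a simple text match rather than a real validation of the certificate.

```shell
#!/bin/bash
# Sketch: copy a private CA certificate into the Citrix keystore.
# install_citrix_ca is a hypothetical helper name. The keystore path is the
# one used earlier in this tutorial; the second argument overrides it.
install_citrix_ca() {
    local cert="$1"
    local keystore="${2:-/usr/lib/ICAClient/keystore/cacerts}"

    # Cheap sanity check: a PEM certificate contains this marker line.
    if ! grep -q -e '-----BEGIN CERTIFICATE-----' "$cert" 2>/dev/null; then
        echo "error: $cert does not look like a PEM certificate" >&2
        return 1
    fi

    cp "$cert" "$keystore/"
}

# Example (needs root to write under /usr/lib):
#   sudo bash -c '. ./this-file.sh; install_citrix_ca /etc/wpa_supplicant/certs/ca.pem'
```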

9. Lastly we need to set our go script to launch on user login.

Go to the System menu > Preferences > Startup Applications, then click "Add", Name it "Citrix" and in the command field enter "/home/ubuntu/go" and then click "Add".
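The Startup Applications dialog stores each entry as a .desktop file under ~/.config/autostart, so the same result can be scripted. This is a sketch of that alternative rather than the method I used above; write_autostart_entry is a made-up helper name, and the third argument only exists so the function can be tried in a scratch directory.

```shell
#!/bin/bash
# Sketch: create an autostart entry without using the GUI.
# write_autostart_entry is a hypothetical helper name.
# Usage: write_autostart_entry NAME COMMAND [AUTOSTART_DIR]
write_autostart_entry() {
    local name="$1" command="$2"
    local dir="${3:-$HOME/.config/autostart}"

    mkdir -p "$dir" || return 1

    # Minimal desktop entry: Type, Name and Exec are the essential keys.
    cat > "$dir/$name.desktop" <<EOF
[Desktop Entry]
Type=Application
Name=$name
Exec=$command
X-GNOME-Autostart-enabled=true
EOF
}

# Example, matching the GUI steps above:
#   write_autostart_entry Citrix /home/ubuntu/go
```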

10. Shut down Ubuntu and the changes will be written to the persistent file (casper-rw in the root of the USB stick).

At this point it might be worth rebooting into Ubuntu again to ensure it does indeed connect to the wireless network and launch Firefox with your Citrix login page before proceeding.

Additional customizations

We have done the bulk of the configuration, but we still need to make a few changes to the boot loader.

Let's edit text.cfg to cut down on the options presented to the end user. The only option I want presented is the ability to boot the Ubuntu Live CD, which should help cut down on any potential accidents.

To do this insert the USB stick into your Windows system, open the /syslinux/text.cfg file and make it read as follows.

default live

label live

menu label ^Run Ubuntu from this USB

kernel /casper/vmlinuz

append noprompt cdrom-detect/try-usb=true persistent file=/cdrom/preseed/ubuntu.seed boot=casper initrd=/casper/initrd.lz splash --

We can take this a step further by making a change to /syslinux/syslinux.cfg that will totally suppress the boot menu, but this is optional. If you want to remove the boot menu, just remove the following line from syslinux.cfg.

default vesamenu.c32

Save the changes and continue to the next section.

Merging the casper-rw changes back

1. Open the primary USB stick in Windows and rename casper-rw to casper1

2. Boot Ubuntu from the USB stick again. You will notice when you get back into Ubuntu that the changes are all missing. Don't worry, we have done that on purpose.

3. Install the squashfs-tools package, which provides mksquashfs. This will allow us to re-create the squash file system with our merged changes.

sudo apt-get install squashfs-tools

4. We need to make some temporary directories and mount the files we plan to merge. The following commands will create the temporary directories, mount the persistent changes file, mount the read-only operating system file and then overlay them both in the /tmp/tmp-squash directory.

cd /tmp

mkdir -p tmp-squash tmp-rw tmp-sqfs

sudo mount -o loop /cdrom/casper1 tmp-rw

sudo mount -o loop /cdrom/casper/filesystem.squashfs tmp-sqfs

sudo mount -t aufs -o br:tmp-rw:tmp-sqfs none tmp-squash

5. Insert your secondary USB drive. My USB is named "USB" so it mounted under /media/USB/
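The mount commands in step 4 fail with fairly unhelpful messages if casper-rw was not renamed or the stick is mounted somewhere other than /cdrom, so it can be worth checking the inputs before running them. A minimal sketch follows; check_merge_inputs is a name I made up, and the optional argument is only there so you can point it at a different mount point.

```shell
#!/bin/bash
# Sketch: confirm the two files that step 4 mounts are where we expect.
# check_merge_inputs is a hypothetical helper name.
# Usage: check_merge_inputs [LIVE_MEDIA_ROOT]   (default: /cdrom)
check_merge_inputs() {
    local root="${1:-/cdrom}" missing=0 f

    for f in "$root/casper1" "$root/casper/filesystem.squashfs"; do
        if [ ! -f "$f" ]; then
            echo "missing: $f" >&2
            missing=1
        fi
    done

    # Returns 0 when both files exist, 1 otherwise.
    return "$missing"
}

# Example:
#   check_merge_inputs && echo "ready to mount"
```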

6. There is one last configuration change we need to make before we write the changes back and that is to remove the "Install Ubuntu 10.04" icon from the Desktop. We don't want users accidentally installing Ubuntu over their current operating system.

rm -f /tmp/tmp-squash/home/ubuntu/Desktop/Install*

7. Now we need to "squash" the contents of these folders into a single file. This means that when we boot this USB in the future, the changes we previously made are always present, and anything changed during a session is reset on reboot.

sudo mksquashfs tmp-squash /media/USB/filesystem.squashfs

8. When the process is complete, shut down Ubuntu, move back to your Windows machine and insert both USB sticks.

9. On the primary USB drive you can remove the casper1 file, though it might be worth backing it up in case you want these changes in the future.

10. Copy the filesystem.squashfs file you created in step 7 from the root of the secondary USB to the /casper folder on the primary USB. You should be prompted to overwrite the file; click yes.
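I do this last copy from Windows, but if you would rather do it from a Linux machine, it is easy to confirm the copy matches the source before pulling the stick. The following is a sketch only: copy_and_verify is a made-up helper name and the paths in the example are placeholders for wherever your two sticks are mounted.

```shell
#!/bin/bash
# Sketch: copy a file and confirm the copy is byte-for-byte identical.
# copy_and_verify is a hypothetical helper name.
# Usage: copy_and_verify SOURCE DESTINATION
copy_and_verify() {
    local src="$1" dest="$2"

    cp "$src" "$dest" || return 1

    # Flush buffered writes out to the USB stick before comparing.
    sync

    # cmp -s is silent and returns 0 only if the files are identical.
    cmp -s "$src" "$dest"
}

# Example (placeholder mount points):
#   copy_and_verify /media/USB/filesystem.squashfs /media/PRIMARY/casper/filesystem.squashfs
```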

You're all done! You now have a read-only, bootable, Linux-based Citrix client that should work on a large number of devices. I have tried 5 devices in my network and they all work beautifully.

You can now image multiple Ubuntu USB flash drives and copy your custom filesystem.squashfs to make them instant Citrix access drives.